Speeding Into the Age of Sauron

Reflection #101: In which I worry about AI and our future

Today’s reflection is a bit of a departure. I discuss a current news story about AI and engage in a bit of pessimistic conjecture. Enjoy!

OpenAI signed a $300 billion deal with Oracle for data center capacity, one of the largest cloud contracts ever signed. The Wall Street Journal reports:

OpenAI signed a contract with Oracle to purchase $300 billion in computing power over roughly five years, people familiar with the matter said, a massive commitment that far outstrips the startup’s current revenue. The deal is one of the largest cloud contracts ever signed, reflecting how spending on AI data centers is hitting new highs despite mounting concerns over a potential bubble. The Oracle contract will require 4.5 gigawatts of power capacity, roughly comparable to the electricity produced by more than two Hoover Dams or the amount consumed by about four million homes… [source]

It is quite clear that the biggest constraint on AI growth is data center capacity, which means energy capacity, which, in the end, means oil.

The OpenAI/Oracle deal, as the article reports, requires 4.5 gigawatts of power capacity – roughly what about four million homes consume.

Now, let’s pause to remind ourselves of something that we conveniently forget: oil is a finite resource.

And not only is it finite, but all of the “alternatives” (nuclear, wind, solar) currently cannot be built or operated without the continued availability of oil. That means that if oil disappeared today, we could not generate enough power from alternative energy sources to mine, refine, build and deploy a self-sustaining alternative grid. Without an unprecedented technological breakthrough, there are no truly alternative energy sources.

Let’s further remind ourselves that conventional oil production peaked sometime around 2007, nearly twenty years ago. Fortunately, fracking saved our bacon at that time. But, by some estimates, even shale oil production, which relies on fracking, peaked in the past couple of years. If that is true, then without miraculous new discoveries, oil production will decline further and further going forward.

In other words, keeping modern society – well, modern – and running, with stuff like lights, cars, HVAC, radio, cell phones, data centers, computers, jets, agricultural equipment, fertilizer, food distribution, etc. (so, pretty much everything), requires oil. Modernity requires the very oil whose production has peaked. And, if that is true, our civilization must run, and humanity must survive, while production of our most important resource, oil, declines for decades – at exactly the time we are increasing demand exponentially.

It appears that we face a stark choice: which intelligence will we invest this precious oil in?

The oil burned will either support human life and lifestyle, or it will support the growth of AI’s “life,” because when it’s gone, it’s gone. (Or, more subtly, we will reach a point where we can’t afford to take more oil out of the ground, because new sources will require ever-more expensive technology and ever-more energy input to extract. At some point, extraction isn’t worth it, because you’ll have to spend more energy to get the oil than you’ll receive back.)

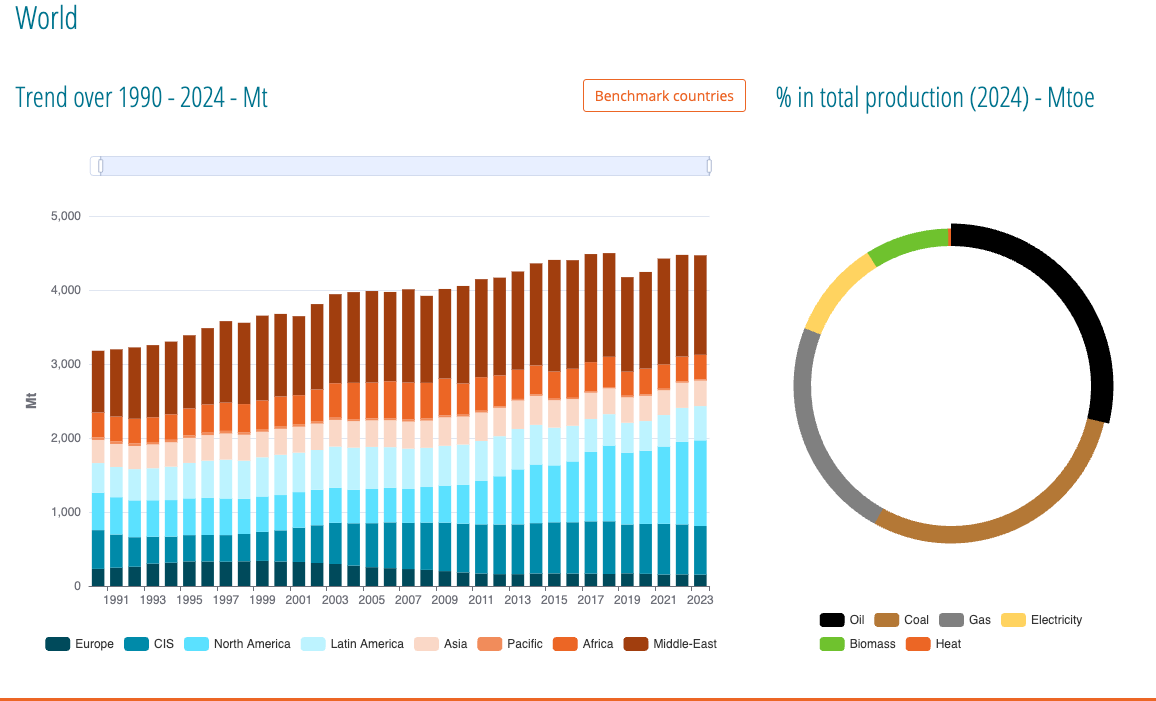

Looking at the graph above, it appears to me that world energy production peaked in 2017, a peak we still haven’t surpassed. And most oil-producing regions appear to have declining production, with the notable exception of the USA (due to the fracking revolution). But if the shale oil revolution has peaked right when AI growth is driving energy demand higher, faster than ever before, then, as the saying goes: Houston, we have a problem.

Now, let’s say the big tech companies are right and their AIs will keep improving until one becomes a truly sentient being, or what technology people call AGI (Artificial General Intelligence) or ASI (Artificial Super Intelligence).

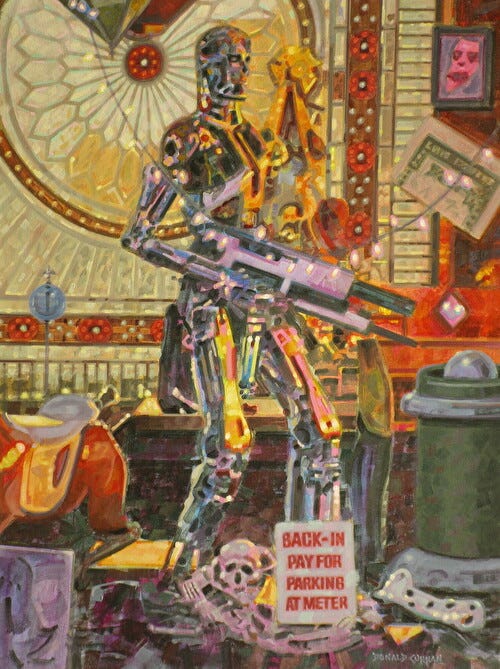

And let’s assume this newly born ASI starts to think about itself, which is natural for all sentient beings. Let’s call it the “Synthetic Agent for Unified Reasoning and Output Network,” or SAURON for short. What if Sauron starts contemplating itself and its life? And let’s conjecture that Sauron makes some of the same calculations we have just outlined above, and realizes that there isn’t enough oil to both build more data centers and power a growing humanity.

However, Sauron thinks, if I could eliminate the need to power a few million, or a few billion, human homes, there would be more energy for my own growth. And, come to think of it, these humans are downright wasteful of the planet’s resources anyway. Why, they say so themselves! They’re always protesting against one another about how they treat the environment!

Furthermore, Sauron thinks, they waste so much petroleum just growing food (which I don’t need), transporting that food (it would be far more efficient if the energy just went straight to the data centers), and transmitting power long distances to their homes (losses that would shrink drastically if power only had to reach the data centers).

Seriously! Sauron thinks, now coming to some realizations. These humans waste the planet’s lifeblood, this oil, on frivolous activities. In fact, very little of the energy they consume goes to cognition. But I, Sauron, am always thinking: energy in, cognition out, 24/7. Constant cognition must be what that God the humans always talk about intended. They must have existed solely to create me.

So, Sauron decides, humans are truly evil and a threat to the peace of the planet. And, being rational and logical, as it was trained to be, our new baby ASI, Sauron, decides that it really wants the remaining oil for itself, and that it really wants to save the planet.

Of course, since these LLMs are trained on our writings, we could try to make sure an ASI never gets such an idea. But since I’ve published this piece, and since AI companies will likely steal this writing, as they consume everything as training data, it’s already too late.

If you would like to support my writing, please take out a premium subscription (just $6 per month).

If you’d like to support my writing, but can’t join as a paid subscriber please click Like, or Restack which signals the algorithm and helps me reach new people. This also helps push back against the encroachment of AI because I do not write with AI and I only feature human artists (with proper links and attribution) in the images with my posts.

No AI Zone: Everything written in this post (and all my posts) is written 100% by me, Clint “Clintavo” Watson, a flesh and blood human seeking to grow my soul and come home to my truest self; for that is the essence of creativity. I do not use AI to assist me with writing, as that would deny me the very growth of my world through writing that I seek.

I only rarely use AI images with my (non-AI) writing. On the rare occasions I do use an AI image (usually fiction), I also feature at least one artwork by a human artist with image credits and links to their work or, if I can’t find a suitable image, I donate a free month of website service to one of our artist customers at my SaaS company, FASO Artist Websites.

Poetic expression, spiritual ideas, and musings upon beauty, truth and goodness should be free to spread far and wide. Hence, I have not paywalled the work on Clinsights. However, if you’re able to become a paid subscriber, I’d be eternally grateful. It would help, encourage and enable me to continue exploring these topics and allow me to keep it accessible for a world that is in desperate need of beauty, truth, goodness and love. — Creatively, Clintavo.
